Automating ETL Testing with PySpark and AWS Glue: A Step Towards Efficiency and Accuracy

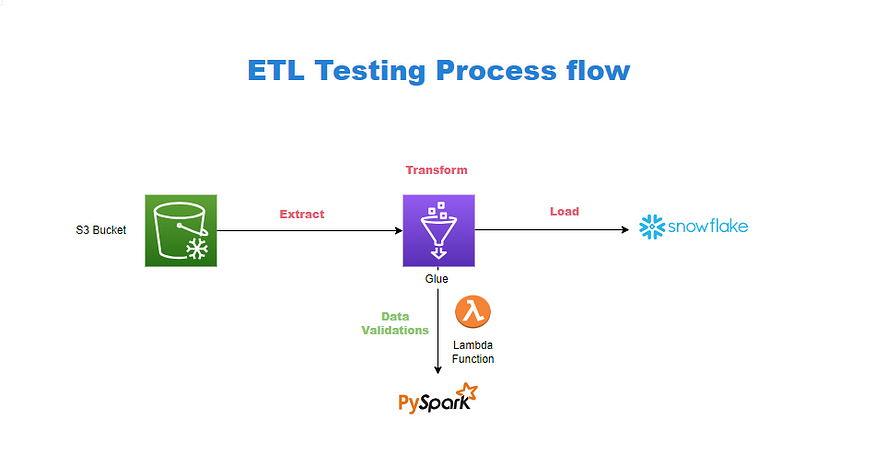

In today’s fast-paced data-driven environment, ensuring the integrity and accuracy of data is paramount. However, manual ETL (Extract, Transform, Load) and data testing methods often fall short, leading to time-consuming processes and increased susceptibility to human error. At Optisol Business Solutions, we recognized these challenges faced by our clients and proposed an automated solution using PySpark integrated with AWS Glue, aiming to revolutionize the ETL testing process.

Current Challenges in Manual ETL Testing

In the existing setup, our client relies heavily on manual ETL and data testing processes. This traditional approach presents several issues:

- Time-Consuming: Manual testing significantly slows down the entire ETL pipeline, delaying data availability for analytics.

- Prone to Human Error: The risk of errors is high, which can lead to inaccurate data validation and compromised data quality.

- Limited Validation: Comprehensive field-level validation is challenging to achieve manually, affecting the overall data integrity and reliability.

The Proposed Solution: Automating ETL Testing with PySpark

To address these limitations, we proposed an automated ETL testing solution leveraging PySpark’s robust data processing capabilities, integrated seamlessly with AWS Glue. This solution focuses on several key validation checks to ensure data integrity and accuracy:

- Record Count Validation: Ensures the number of records in the source matches the target, identifying any discrepancies early in the process.

- Field-Level Validation: Verifies that individual fields/columns are correctly transferred and transformed, maintaining data consistency.

- Null Validation: Checks for the presence of null values where they shouldn’t exist, preventing data quality issues.

- Duplicate Value Check: Identifies and flags duplicate records, which could compromise data quality.

- Schema Validation: Confirms that the schema of the data in the source and target are consistent, ensuring structural integrity.

Integrating PySpark with AWS Glue: Step-by-Step Process

Integrating PySpark with AWS Glue involves several key steps that ensure smooth data extraction, transformation, validation, and loading processes.

1.Set Up AWS Glue Environment:

- Create an AWS Glue Data Catalog to store metadata for your data sources.

- Define Glue Crawler to scan your data in S3 and populate the Data Catalog.

- Create Glue ETL Jobs where the PySpark scripts will be executed.

2.Data Extraction:

- Use AWS Glue to connect to the data source in S3.

- Define the data schema and map the data source to the Glue Data Catalog.

3.Transformation and Validation Using PySpark:

- Write PySpark scripts to perform the necessary transformations and validations.

- For Record Count Validation: Compare the count of records between the source and the target.

source_count = spark.read.csv("s3://source-bucket/data.csv").count()

target_count = spark.read.format("snowflake").options(...).load().count()

assert source_count == target_count, "Record count mismatch"- For Field-Level Validation: Check if the fields are correctly transferred and transformed.

source_df = spark.read.csv("s3://source-bucket/data.csv")

target_df = spark.read.format("snowflake").options(...).load()

assert source_df.schema == target_df.schema, "Schema mismatch"- For Null Validation: Ensure there are no null values in critical fields.

null_count = source_df.filter(source_df["important_field"].isNull()).count()

assert null_count == 0, "Null values found in important_field"- For Duplicate Value Check: Identify any duplicate records.

duplicate_count = source_df.groupBy("unique_key").count().filter("count > 1").count()

assert duplicate_count == 0, "Duplicate records found"- For Schema Validation: Verify the schema consistency between the source and target.

assert source_df.schema == target_df.schema, "Schema mismatch"4.Loading:

- After successful validation, load the transformed data into the target Snowflake database.

source_df.write.format("snowflake").options(...).save()5.Result Storage:

- Generate validation results and store them in an S3 bucket.

validation_results.toPandas().to_csv("s3://result-bucket/validation_results.csv", index=False)Benefits of Automated ETL Testing

Implementing this automated ETL testing solution offers several key benefits:

- Time Efficiency: Automation significantly reduces the time required for testing, allowing for quicker turnaround and more frequent testing cycles.

- Accuracy and Consistency: Automated tests minimize human error, ensuring more reliable and consistent validation results.

- Comprehensive Validation: Field-level validation and other checks ensure a thorough examination of data integrity, which is challenging to achieve manually.

- Scalability: The solution can easily be scaled to accommodate larger datasets and more complex validation requirements without a proportional increase in testing effort.

- Cost Savings: Reduced manual effort translates to lower labor costs and resource allocation for testing activities.

Conclusion

By leveraging PySpark and AWS Glue to automate the ETL testing process, Optisol Business Solutions addresses the limitations of manual testing, providing a solution that enhances efficiency, accuracy, and comprehensive data validation. This integration ensures that data integrity is maintained consistently across the ETL process, leading to better data quality and operational efficiency. With data sources in S3 and the target in Snowflake, this solution significantly enhances the overall data management strategy for our clients.

The automated ETL testing solution not only meets the current needs but also lays a scalable foundation for future data validation requirements, ensuring reliable and high-quality data outcomes for all analytical endeavors.

No comments:

Post a Comment